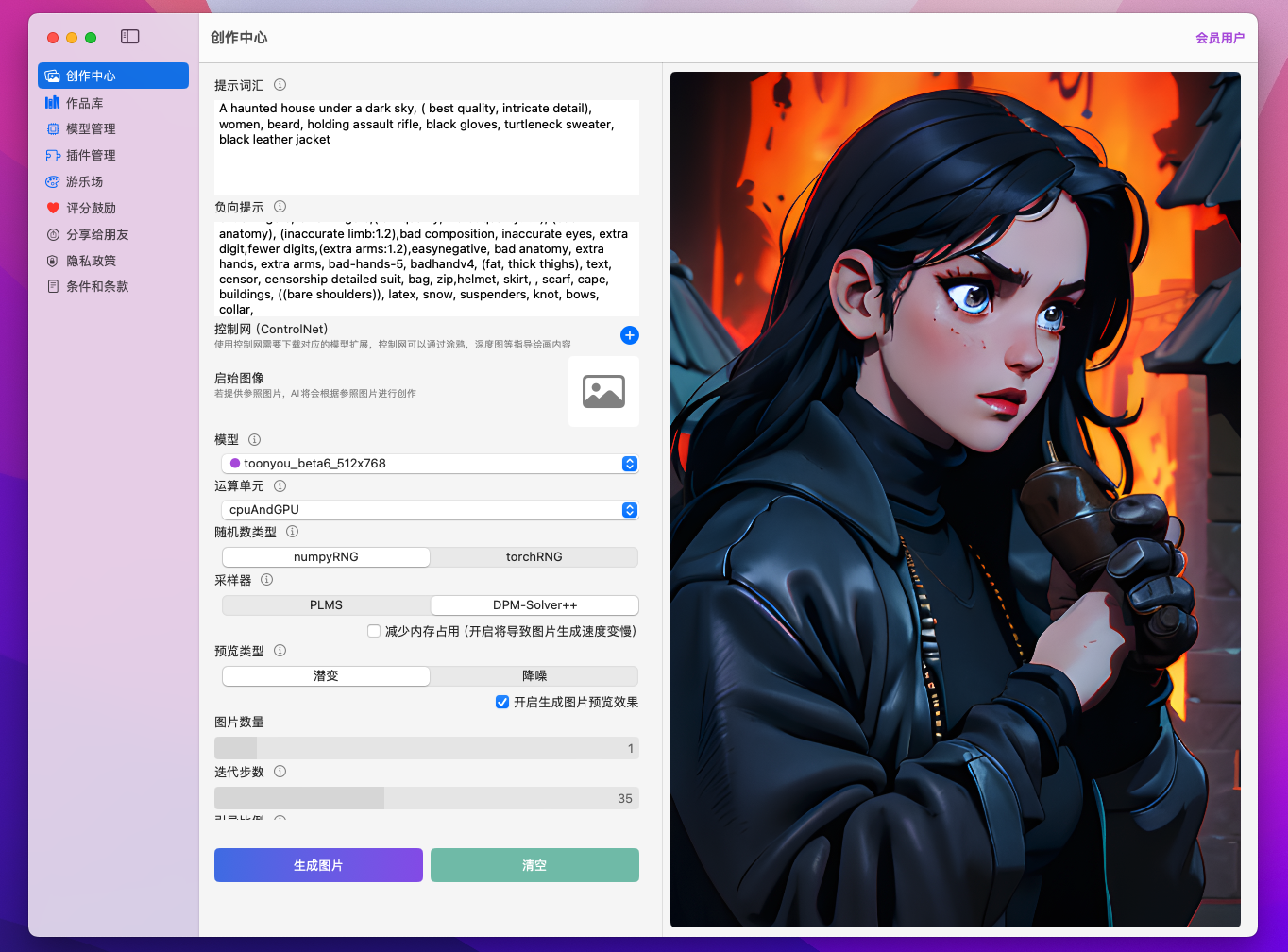

Creation Center

All image creation takes place in the Creation Center, which offers numerous configurable parameters. Don't worry: as you gain a deeper understanding of AI art, you'll become adept at configuring them, find patterns within the intricate settings, and discover your own workflow and preferences.

Parameter Explanations

Prompt

The prompt is a text description of the content you want in the generated image; the AI creates the image based on it. Use English prompts whenever possible, as English descriptions currently yield better results. Different AI models may interpret the same prompt differently, so check a model's introduction before switching to it.

ControlNet

ControlNet makes the AI's output more controllable by guiding generation with a preprocessed control image, such as a doodle, a depth map, or a pose. Supported preprocessing types include doodle, depth, interpolation, and pose. Currently, doodle and interpolation images can be edited directly in JoyFusion; other types must be imported as already-processed control images. Multiple ControlNet entries can be added and used simultaneously. This feature works only with models that support ControlNet, and only when the Control Network Plugin and Control Network Style Plugin are installed.

Initial Image

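An optional starting image that guides generation: instead of starting from pure noise, the AI builds the new image on top of it, with Strength controlling how far the result may depart from it.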

Negative Prompt

A text description of elements or features that should not appear in the generated image. The AI treats it as a constraint during generation, steering the result away from content that conflicts with your intent. Write the negative prompt in English if possible, as English descriptions currently yield better results.
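In Stable Diffusion pipelines, a negative prompt is commonly implemented through classifier-free guidance: the model's noise prediction conditioned on the negative prompt takes the place of the unconditional (empty-prompt) prediction. The formula below sketches that common approach; it is an assumption that JoyFusion works exactly this way. Here \(\epsilon_\theta\) is the model's noise prediction for the noisy image \(x_t\) at step \(t\), \(c\) is the prompt embedding, \(c_{\mathrm{neg}}\) is the negative-prompt embedding, and \(s\) is the guidance scale:

```latex
\hat{\epsilon}_t = \epsilon_\theta(x_t, c_{\mathrm{neg}}) + s \left( \epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, c_{\mathrm{neg}}) \right)
```

Each step pushes the prediction toward the prompt and away from the negative prompt; when no negative prompt is given, \(c_{\mathrm{neg}}\) is simply the embedding of an empty prompt.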

Model

The pre-trained model used for generation determines the style and look of the artwork. All models in this application are based on the CoreML Stable Diffusion model or on fine-tuned versions of Stable Diffusion.

Models of the "original" type can run only on CPU & GPU, not on the Neural Engine; on GPU they generally offer better performance.

Models of the "split-einsum" type are compatible with all compute unit options, including the Neural Engine, so you can choose whichever suits your device. After you switch models, the first generation takes extra time while the new model is loaded.

Steps

The number of denoising iterations in the generation process. More steps generally produce finer images, but generation time grows roughly in proportion to the step count.
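To make "iterations" concrete, the sketch below picks evenly spaced noise levels for a given step count. It is a simplified, hypothetical helper (`timestep_schedule` is not a JoyFusion API): it assumes 1000 training noise levels, as Stable Diffusion uses, and a plain even spacing, whereas real samplers space timesteps in their own ways. Each returned timestep corresponds to one pass through the model, which is why more steps take longer.

```python
def timestep_schedule(steps: int, train_timesteps: int = 1000) -> list[int]:
    """Hypothetical helper: pick `steps` evenly spaced timesteps, noisiest first.

    Assumes 1000 training noise levels and a simple even spacing;
    real samplers choose their timesteps differently.
    """
    if not 1 <= steps <= train_timesteps:
        raise ValueError("steps must be between 1 and train_timesteps")
    stride = train_timesteps // steps
    # Each returned timestep costs one pass through the model,
    # so generation time grows with the length of this list.
    return list(range(0, steps * stride, stride))[::-1]


for n in (10, 25, 50):
    schedule = timestep_schedule(n)
    print(f"{n} steps: stride {1000 // n}, from t={schedule[0]} down to t={schedule[-1]}")
```

With more steps, each denoising pass removes a smaller slice of noise, which is where the finer detail comes from.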

Guidance Scale

Controls how strongly your prompt influences the result. Higher values make the generated image follow your description more closely.
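In Stable Diffusion, the guidance scale is the weight used in classifier-free guidance: at every step the model predicts the noise both with and without the prompt, and the final prediction is pushed from the unconditional one toward the prompt-conditioned one. The sketch below shows that arithmetic on short lists standing in for the real noise tensors; `apply_guidance` and the toy values are hypothetical, and it assumes JoyFusion's models follow this standard scheme.

```python
def apply_guidance(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: move from the unconditional prediction toward
    (and, for scale > 1, beyond) the prompt-conditioned prediction."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same shape")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.10, -0.20]  # toy noise prediction without the prompt
cond = [0.30, 0.00]     # toy noise prediction with the prompt
for scale in (1.0, 7.5):
    print(scale, apply_guidance(uncond, cond, scale))
```

A scale of 1 returns the prompt-conditioned prediction unchanged; larger scales extrapolate further in the prompt's direction, which is why the output adheres more closely to the description.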

Strength

Applies only when an initial image is provided. It controls how far the result may depart from the initial image. Higher values make the prompt and model style more prominent but keep less of the initial image, which may reduce the image's realism; lower values stay closer to the initial image.
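A common Stable Diffusion convention for image-to-image (used, for example, by Hugging Face diffusers) is that strength decides how many of the configured steps actually run: the initial image is noised to an intermediate level and only the remaining steps denoise it. The helper below is a hypothetical sketch of that convention, assuming JoyFusion behaves similarly.

```python
def img2img_steps(steps: int, strength: float) -> tuple[int, int]:
    """Hypothetical helper: return (skipped_steps, denoising_steps) for image-to-image.

    The initial image is noised as if `skipped_steps` had already run,
    then only `denoising_steps` passes of the model are executed.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    denoising_steps = min(int(steps * strength), steps)
    return steps - denoising_steps, denoising_steps


print(img2img_steps(50, 0.5))
print(img2img_steps(50, 0.8))
```

At strength 1.0 every step runs and the initial image is fully noised, so little of it survives; at low strength only a few steps run and the result stays close to the original.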

Sampler

Selects the sampling method (scheduler) used to denoise the image. Different samplers produce different results from the same prompt and seed, and can differ in image quality and in how many steps they need. Try several options to compare their effects.

Compute Units

The hardware CoreML uses to run the model. Models of the "original" type can use CPU & GPU. Models of the "split-einsum" type can use any option, including CPU & Neural Engine.
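The compatibility rules for model types and compute units can be summarized as a small lookup table. The sketch below is hypothetical: the enum values mirror three of Core ML's `MLComputeUnits` option names, but `SUPPORTED_UNITS` and `check_compute_units` are illustrative helpers encoding the rules stated in this guide, not part of JoyFusion or Core ML.

```python
from enum import Enum


class ComputeUnits(Enum):
    # Values mirror three of Core ML's MLComputeUnits option names
    CPU_AND_GPU = "cpuAndGPU"
    CPU_AND_NEURAL_ENGINE = "cpuAndNeuralEngine"
    ALL = "all"


# Hypothetical table: "original" models run on CPU & GPU only,
# "split-einsum" models are compatible with every option.
SUPPORTED_UNITS = {
    "original": {ComputeUnits.CPU_AND_GPU},
    "split-einsum": set(ComputeUnits),
}


def check_compute_units(model_type: str, units: ComputeUnits) -> None:
    """Raise ValueError if the model type cannot run on the chosen compute units."""
    supported = SUPPORTED_UNITS.get(model_type)
    if supported is None:
        raise ValueError(f"unknown model type: {model_type!r}")
    if units not in supported:
        raise ValueError(f"{model_type} models cannot run with {units.value}")


check_compute_units("split-einsum", ComputeUnits.CPU_AND_NEURAL_ENGINE)  # accepted
try:
    check_compute_units("original", ComputeUnits.CPU_AND_NEURAL_ENGINE)
except ValueError as err:
    print(err)
```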

Number of Images

Specifies how many images are generated in one drawing process. A larger number increases drawing time and resource consumption.

Preview Type

Controls how in-progress previews are shown while the image is being generated. There are two options, "latent crossfade" and "denoise mode", which display the intermediate results differently. If your device's performance is limited, you can turn previews off.

Seed

The seed initializes the random noise that generation starts from. With the same seed and the same other settings, you get the same image; different seeds produce different images. This makes experiments reproducible while still allowing variety. A seed of -1 tells the system to pick a random seed.
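The sketch below illustrates why a fixed seed reproduces an image: the seed fully determines the starting noise. It uses Python's standard `random` module and a short list as a toy stand-in for the real latent noise tensor; `resolve_seed`, `initial_noise`, and the 32-bit seed range are hypothetical choices, not JoyFusion's implementation.

```python
import random


def resolve_seed(seed: int) -> int:
    """Treat -1 as 'pick a random seed'; any other value is used as-is.
    The 32-bit range is an assumption for illustration."""
    if seed == -1:
        return random.randrange(0, 2**32)
    return seed


def initial_noise(seed: int, size: int = 4) -> list[float]:
    """Toy stand-in for the starting latent noise: standard normal samples
    drawn from a generator initialized with the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


a = initial_noise(resolve_seed(42))
b = initial_noise(resolve_seed(42))
c = initial_noise(resolve_seed(43))
print(a == b, a == c)
```

Identical seeds yield identical starting noise, and with all other settings fixed the rest of the process is deterministic, so the final image matches as well.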

Random Number Type

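Specifies the type of random number generator used to create the starting noise. Different generator types turn the same seed into different noise, so a given seed produces a different image under each type. To reproduce a result, use the same random number type as well as the same seed.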
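A seed only determines the noise together with the generator algorithm that consumes it. The sketch below does not reproduce the generators real tools use; it compares Python's built-in Mersenne Twister with a textbook Park-Miller linear congruential generator, chosen purely as a second, different algorithm, to show that the same seed yields different sequences. Both function names are hypothetical.

```python
import random


def mt_uniform(seed: int, n: int) -> list[float]:
    """n uniform values from Python's built-in Mersenne Twister generator."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


def lcg_uniform(seed: int, n: int) -> list[float]:
    """n uniform values from the Park-Miller 'minimal standard' LCG."""
    modulus = 2147483647  # 2**31 - 1
    state = seed % modulus or 1  # the state must be nonzero
    out = []
    for _ in range(n):
        state = (16807 * state) % modulus
        out.append(state / modulus)
    return out


seed = 42
print(mt_uniform(seed, 3))
print(lcg_uniform(seed, 3))
```

Each generator is reproducible on its own, but switching the generator type changes the noise, and therefore the image, even when the seed stays the same.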

Reduce Memory Usage

Reduces memory usage while the CoreML models run on your device, but may slow down generation.
